User Defined Functions

Automatic vs. Manual

There are two types of people in this world: those who like everything ready to use, and those who want to tailor everything to their requirements. Both approaches have their pros and cons. Only 18% of Americans can actually drive a manual; the rest prefer an automatic. The fun and control you get while driving a manual can’t be explained, and I myself prefer driving one. It might feel like overhead at the start, but once you get the hang of it, it is way better than an automatic, whether you are going for acceleration or economy, unless you are driving a Tesla 🙂

Apache Spark’s user-defined functions (UDFs) fall into the same tailor-made category. I have read many articles that focus on pushing engineers away from them, but in reality UDFs are not bad; they are just complex. A UDF is not the right tool for every scenario, but there are use cases where a UDF can give you huge performance benefits with a little tweaking. The main issue is that a UDF is a black box to the Spark optimizer, so Spark cannot optimize the code inside it.

Today I am sharing a use case where a UDF saved almost 50% of the processing overhead by reducing the memory footprint of complex business logic.

Scenario

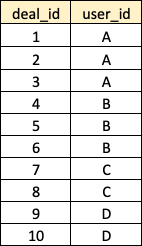

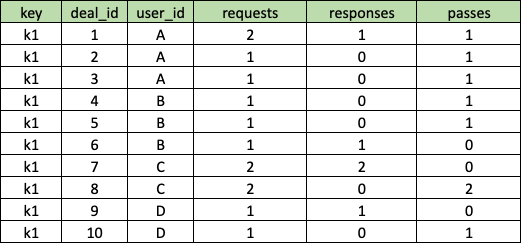

Take the example of a real-time bidding system that shows multiple shopping deals on a product to end users. While bidding, a user can respond by enabling any one of the active deals on the product.

– A user can activate only one deal per checkout.

– We log all activated deals on a product. However, deals are user-specific, so each user sees only the deals activated for their ID, based on geo, previous sales, and coupons.

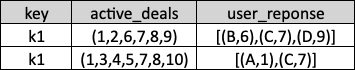

Logs:

key: Any combination of keys.

active_deals: Deals activated on a bidding auction in real time.

user_timeout: Users who timed out while bidding.

user_response: Valid responses from users.

Reporting:

– We need to report on total requests, responses and timeouts.

– Count the number of times users decided to pass on a specific deal in a request.

Output:

– Requests: 13

– Responses: 5

– Passes: 8

Solution 1 (Spark API)

We can solve this with the Spark DataFrame API, without adding any extra overhead.

– Get the number of requests by exploding active_deals.

– Explode user_response and count the responses.

– Subtract the responses from the requests to get the passes.
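
The three steps above can be modeled in plain Python to check the arithmetic before writing the Spark job. This is only a minimal sketch, not the implementation used in this post: the rows are invented, the idea that active_deals and user_response are arrays of deal IDs is an assumption, and `explode` and `report` are hypothetical helpers that imitate what a DataFrame explode followed by a count would do.

```python
# Hypothetical log rows: the field names follow the post, the values are invented.
# Assumption: active_deals and user_response hold arrays of deal IDs.
logs = [
    {"key": "k1", "active_deals": ["d1", "d2", "d3"],
     "user_timeout": ["u9"], "user_response": ["d2"]},
    {"key": "k2", "active_deals": ["d1", "d4"],
     "user_timeout": [], "user_response": ["d1"]},
    {"key": "k3", "active_deals": ["d2", "d3", "d5"],
     "user_timeout": ["u7"], "user_response": []},
]


def explode(rows, column):
    """Yield one (key, element) pair per array element.

    Rows whose array is empty produce no output, which is also how
    an explode on a DataFrame array column behaves.
    """
    for row in rows:
        for item in row[column]:
            yield row["key"], item


def report(rows):
    """Compute the three reporting metrics from the raw log rows."""
    # Step 1: every active deal shown in a row counts as one request.
    requests = sum(1 for _ in explode(rows, "active_deals"))
    # Step 2: every element of user_response counts as one response.
    responses = sum(1 for _ in explode(rows, "user_response"))
    # Step 3: whatever was requested but not answered is a pass.
    return {
        "requests": requests,
        "responses": responses,
        "passes": requests - responses,
    }


print(report(logs))
# → {'requests': 8, 'responses': 2, 'passes': 6}
```

With the figures from the Output section, the same subtraction gives 13 − 5 = 8 passes.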